AI is My Bestie: Integrating LLMs Into Your Hunt Team

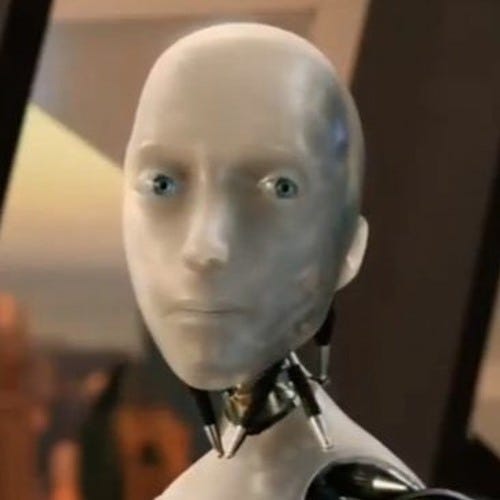

But she’s still not allowed to run queries without supervision.

AI is my new bestie. She helps write Splunk queries, translates TTPs from dozens of threat intel articles at once, and summarizes logs faster than I can scroll to the bottom of a JSON blob. But like any bestie, she’s unpredictable, occasionally chaotic, and still needs me to double-check what she wrote before she hits send.

I’ve seen LLMs speed up real work when used in the right way, and I’ve also seen them fail in ways that only make sense if you’ve been burned by a hallucinated query. This post isn’t about hype. It’s about how we actually use AI to thrunt: where it can help, how it can burn, and why it absolutely still needs a human in the loop.

Let’s be honest, most threat hunters didn’t ask for an AI bestie. Nobody put “talk to a chatbot” in our five-year career goals.

But once we started experimenting, we found real utility. Not in replacing work, but in getting to the interesting stuff faster.

Here’s where AI actually earns its spot on the team:

It speeds up the boring parts. We’re still writing our own detections, but we no longer have to spend 20 minutes debugging KQL syntax from memory.

It helps junior hunters move faster. Instead of waiting on someone to explain a TTP or translate a Splunk query, they can ask AI and get moving.

It jumpstarts creative thinking. Sometimes you need to break out of your own logic loop. Asking an LLM for ways a threat actor might move through Azure AD can spark the “what if” that leads to a great hunt.

It reduces cognitive drain. Summarizing five CISA reports before a coffee break? Yes, please. AI can help distill the noise into something huntable.

This isn’t magic, more like mechanical support. A speedy, robotic sidekick that can help sift through the trillions of bits and bytes to make that haystack a little bit smaller.

The Big Buckets of Thrunting with AI

This isn’t some shiny all-purpose assistant. AI is only as helpful as the problem you give it and the judgment you apply after. Let’s look at the core areas you can leverage AI for in threat hunting.

Hypothesis Generation 🧠

Use AI as a sounding board to escape bias loops or to think more like an attacker.

Generate hunt hypotheses from threat actor reports

Brainstorm alternative explanations for observed behaviors

Simulate adversary actions based on specific environments

Translate vague “weirdness” into structured hunt paths

Break tunnel vision views of threats

Query & Detection Drafting 🧪

Let an LLM draw the outline and let your threat hunters color inside the lines.

Write Sigma/KQL/Splunk/XQL rules from natural language prompts

Convert intelligence blog TTPs or government advisories into query logic

Explain unfamiliar detection rule syntax

Generate rule variants for different log sources

Threat Intel Summarization 📚

Even AI may not have time for the infinite number of intel reports that are being generated.

Summarize longform threat intel reports into digestible bullets

Extract MITRE techniques and associated IOCs

Suggest hunt ideas based on recent campaigns

Translate high-level narrative into huntable leads
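One cheap way to keep the bestie honest here is to cross-check her extraction against the report itself. The sketch below is a minimal, assumption-heavy example: the function names (`extract_indicators`, `unsupported_claims`) are made up for illustration, and the regexes are deliberately loose. They will miss defanged indicators like `203[.]0[.]113[.]7` and will happily match version numbers that look like IPs.

```python
import re

# MITRE ATT&CK technique IDs look like T1059 or T1059.001.
TECHNIQUE_RE = re.compile(r"\bT\d{4}(?:\.\d{3})?\b")
# Loose IPv4 and SHA-256 patterns; these are not full validators.
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")
SHA256_RE = re.compile(r"\b[a-fA-F0-9]{64}\b")


def extract_indicators(text: str) -> dict[str, list[str]]:
    """Pull technique IDs and a few IOC types out of report text with plain regexes."""
    return {
        "techniques": sorted(set(TECHNIQUE_RE.findall(text))),
        "ipv4": sorted(set(IPV4_RE.findall(text))),
        "sha256": sorted({h.lower() for h in SHA256_RE.findall(text)}),
    }


def unsupported_claims(llm_items: list[str], source_text: str) -> list[str]:
    """Return items the LLM listed that never appear verbatim in the source report."""
    return [item for item in llm_items if item not in source_text]


report = "The actor used T1059.001 and T1078 from 203.0.113.7."
print(extract_indicators(report)["techniques"])           # ['T1059.001', 'T1078']
print(unsupported_claims(["T1059.001", "T1566"], report))  # ['T1566']
```

Anything that shows up in `unsupported_claims` isn’t automatically wrong (the model may have inferred a technique from the narrative), but it is exactly the kind of item a human should look at before it becomes a hunt lead.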

Log Triage and Anomaly Highlighting 🪵

Use a robot to identify patterns and anomalies. Make sure to follow your data privacy and acceptable use policies!

Highlight unusual or rare values across log fields

Summarize long JSON blobs into key actions/events

Compare patterns across user or host behaviors

Flag suspicious combinations (e.g., parent-child process chains)
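You don’t always need an LLM for the first pass. A plain frequency count gets you a long way, and it gives you something concrete to compare the model’s “this looks weird” against. Here is a rough sketch, assuming your events are already parsed into dictionaries; the `parent` and `child` field names are placeholders, not anything your logs are guaranteed to have.

```python
from collections import Counter


def rare_values(events: list[dict], field: str, max_count: int = 1) -> list[tuple[str, int]]:
    """Return values of `field` seen at most `max_count` times, rarest first."""
    counts = Counter(str(e[field]) for e in events if field in e)
    rare = [(value, n) for value, n in counts.items() if n <= max_count]
    return sorted(rare, key=lambda pair: (pair[1], pair[0]))


def rare_process_pairs(events: list[dict], max_count: int = 1) -> list[tuple[str, int]]:
    """Apply the same rarity check to parent -> child process pairs."""
    pairs = [
        {"pair": f"{e['parent']} -> {e['child']}"}
        for e in events
        if "parent" in e and "child" in e
    ]
    return rare_values(pairs, "pair", max_count)


events = [{"parent": "explorer.exe", "child": "chrome.exe"}] * 3
events.append({"parent": "winword.exe", "child": "powershell.exe"})
print(rare_process_pairs(events))  # [('winword.exe -> powershell.exe', 1)]
```

Rare is not the same as malicious, so treat the output as a shortlist for a human, not a verdict.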

Reporting and Communication 🗣

Ask your friend Chat to make sense of your mess of notes and artifacts. Because you are keeping copious notes, right?

Turn analyst notes into narrative summaries

Draft post-hunt debriefs or “lessons learned” writeups

Rephrase technical findings for executive or non-technical audiences

Clean up internal documentation or handoffs between teams

Scaling Tribal Knowledge 🤓

Explain unknown detection tools or formats

Translate across detection platforms (e.g., Splunk → Sentinel)

Provide guidance when stuck (e.g., “What else could this indicate?”)

Fill in for “let me Google that for you” without slowing the team

Girl, That’s Not a Field in Our Logs

AI can be helpful. But before you get all Kris about it, you should know it can also be a menace with main character energy and zero accountability.

Let’s talk about common pitfalls and how AI can betray us:

Hallucinated Queries That Look Legit

Just because ChatGPT can write a Sigma rule doesn’t mean it should. We’ve seen beautifully formatted detections that reference fields we don’t even ingest. Unless you catch it, the query comes back with zero results and you end up declaring the coast clear when nothing was actually checked.
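A quick guardrail is to compare the fields a drafted query references against the fields you actually ingest before anyone runs it. The sketch below is a toy, not a real SPL or KQL parser: it only spots simple `field=value` style comparisons, ignores anything after `by` or inside `eval`, and the `known_fields` set is an assumption standing in for whatever schema inventory you maintain.

```python
import re

# Blank out quoted values first so an "=" inside a string is not mistaken for a field.
QUOTED_RE = re.compile(r'"[^"]*"')
# A field name followed by a comparison operator, e.g. EventCode=4688 or score>=5.
FIELD_RE = re.compile(r"\b([A-Za-z_][\w.]*)\s*(?:!=|>=|<=|=|<|>)")


def referenced_fields(query: str) -> set[str]:
    """Collect field names used in simple comparisons within a query string."""
    stripped = QUOTED_RE.sub('""', query)
    return set(FIELD_RE.findall(stripped))


def unknown_fields(query: str, known_fields: set[str]) -> set[str]:
    """Return referenced fields that are not in the known schema."""
    return referenced_fields(query) - known_fields


draft = 'index=win EventCode=4688 process_name="powershell.exe" parent_image_hash="abc=def"'
known = {"index", "EventCode", "process_name"}
print(unknown_fields(draft, known))  # {'parent_image_hash'}
```

An empty result doesn’t prove the query is right. It only tells you the AI didn’t invent a field name that this simple check can see.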

No Understanding of Your Environment

AI doesn’t know your baselines, log coverage gaps, or business logic. It’ll happily assume you're collecting events you’ve never onboarded and build queries or detections that completely miss how your systems actually work.

Overconfidence

When the AI sounds right, it’s easy to believe it. LLMs are a source, not a shortcut. They may say things that are inaccurate or even patently false. Always check what comes back against your own research and understanding.

Curiosity Collapse

Threat hunting runs on creative friction: following strange patterns and asking "what if?" AI can short-circuit that process by offering an answer too early, flattening a thread that should’ve unraveled.

Data Exposure Risks

Tempted to paste a log straight into ChatGPT? Don’t. Unless you’re using a secured, private LLM, you’re risking more than bad output; you’re risking breach territory. I am not your lawyer, but check your company policy before putting any kind of sensitive data, including company context, into an AI.
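If your policy does allow sharing sanitized snippets, scrub them first. The following is a minimal sketch of that idea, assuming regex-based masking is acceptable under your policy. The patterns and the `extra_terms` parameter are illustrative only, and masking emails and IPs does not make a log safe by itself; hostnames, usernames, and internal paths leak context too.

```python
import re

# (pattern, replacement token) pairs; extend this list for your own data types.
PATTERNS = [
    (re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"), "<EMAIL>"),
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "<IPV4>"),
]


def redact(text: str, extra_terms: tuple[str, ...] = ()) -> str:
    """Mask emails, IPv4 addresses, and any caller-supplied sensitive terms."""
    for pattern, token in PATTERNS:
        text = pattern.sub(token, text)
    for term in extra_terms:
        if term:  # skip empty strings, which would match everywhere
            text = re.sub(re.escape(term), "<REDACTED>", text, flags=re.IGNORECASE)
    return text


line = "login by alice@corp.example from 10.1.2.3 on host FINSRV01"
print(redact(line, extra_terms=("FINSRV01",)))
# login by <EMAIL> from <IPV4> on host <REDACTED>
```

Treat this as a seatbelt, not a permission slip: the policy check still comes first.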

I’m not anti-AI. I’m anti-letting-an-unfiltered-LLM-write-queries-without-supervision.

When used intentionally, AI can boost your team’s speed, lower barriers for hunters, and help everyone get to the “interesting stuff” faster. But it’s not a teammate, it’s a tool. A very fast, very confident, occasionally unhinged tool.

If you’re bringing LLMs into your hunt workflow, make sure you establish rules of engagement:

What can AI help with?

What should it never touch?

When is human review required?

Where is it useful vs. risky?

What are the red flags for hallucinations?

AI isn’t going away anytime soon, so now is the time to use it. Experiment with it. Integrate it into some of your less risky workflows and see your hunting grow to the next level.

