Detection-In-Depth

Eliminating detection blind spots through a multi-layered defense approach

Detection-in-depth is an evolution of the classic cybersecurity principle known as defense-in-depth. Defense-in-depth means that no single security control can fully protect an environment. Instead, multiple layered defenses must work together to slow down, detect, and ultimately stop adversaries.

These layers create redundancy, ensuring that if one layer fails, another stands ready to catch the threat. Detection-in-depth applies this same layered philosophy specifically to detection and monitoring. Rather than relying on a single detection point, it ensures that adversary activity can be caught at multiple stages, through multiple methods, and across multiple levels of abstraction. This creates a resilient, overlapping detection strategy that minimizes blind spots and maximizes the chance of identifying attackers anywhere in their kill chain progression.

Since attackers operate across systems, identities, networks, and applications, your detection strategy must cover this entire landscape. The core purpose of detection-in-depth is to eliminate the blind spots that emerge from relying on single detection mechanisms, ensuring you can spot adversaries along their attack path before they cause significant damage.

Fine-Tuning Detections for Precision

A common question I hear about detection engineering is, "What can I actually do to make my detections better?" The answer starts with precision: raising the share of alerts that turn out to be true positives rather than false positives.
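To make that ratio concrete, precision can be computed as true positives divided by all alerts that fired. The sketch below is only an illustration: the function name and the triage counts are hypothetical, not figures from a real deployment.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Fraction of fired alerts that were real threats: TP / (TP + FP)."""
    total_alerts = true_positives + false_positives
    if total_alerts == 0:
        return 0.0  # no alerts fired, so there is nothing to score
    return true_positives / total_alerts


# Hypothetical triage counts for one rule over the same time window,
# before and after tuning. The rule still catches the same 4 real events.
before_tuning = precision(true_positives=4, false_positives=96)
after_tuning = precision(true_positives=4, false_positives=12)

print(f"before: {before_tuning:.0%}, after: {after_tuning:.0%}")
# prints "before: 4%, after: 25%"
```

Tracking this number per rule, rather than across the whole rule set, makes it easier to see which detections deserve tuning effort first.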

To improve precision, you must first baseline your environment. This means understanding what "normal" looks like, both technically and operationally. You need a clear picture of your business operations, users, infrastructure, and threat landscape. This requires thorough threat modeling, asset inventory, and deep familiarity with your attack surface.

An often-overlooked part of tuning is adapting out-of-the-box (OOTB) detections. Having built numerous OOTB detections as a cloud threat detection engineer for a Cloud SIEM product, I've witnessed firsthand how raw, untuned detections rarely provide optimal value. The most successful customers were those who invested time in customizing and refining these baseline detections to match their unique environment.

Think of OOTB detections as a starting template. They provide a solid foundation but require customization to truly shine. Organizations have different tech stacks, business processes, and risk profiles. What might be suspicious activity in one environment could be perfectly normal in another. For instance, a detection for unusual admin activities needs to account for your specific admin patterns, maintenance windows, and automated processes.

The customers who got the most value from our OOTB detections were those who took the time to understand their normal baselines, adjusted thresholds accordingly, and added contextual enrichment specific to their environment. They treated these detections as living rules that needed regular refinement rather than static, set-and-forget configurations. This approach significantly reduced false positives while maintaining high detection efficacy for real threats.
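One way to picture "adjusting thresholds to your baseline" is to derive the alert threshold from observed history instead of a vendor default. This is a minimal sketch under simple assumptions: the daily counts are made-up numbers for a single admin account, and the mean-plus-three-standard-deviations rule is just one common heuristic, not the method any particular SIEM uses.

```python
import statistics
from typing import Sequence


def baseline_threshold(daily_counts: Sequence[int], sigmas: float = 3.0) -> float:
    """Alert threshold derived from history: mean + sigmas * standard deviation."""
    mean = statistics.mean(daily_counts)
    spread = statistics.pstdev(daily_counts)  # population standard deviation
    return mean + sigmas * spread


def is_anomalous(count: int, daily_counts: Sequence[int], sigmas: float = 3.0) -> bool:
    """True when today's count exceeds the baseline-derived threshold."""
    return count > baseline_threshold(daily_counts, sigmas)


# Hypothetical admin actions per day for one account over a normal week.
normal_week = [10, 12, 11, 9, 13, 10, 12]

print(is_anomalous(14, normal_week))  # slightly busy day, stays under the threshold
print(is_anomalous(40, normal_week))  # far outside the observed baseline
```

Recomputing the baseline on a schedule, and excluding known maintenance windows from it, is what keeps a rule like this from drifting as the environment changes.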

Remember that precision doesn't mean perfection (we'll never have perfect detections). Instead, it means your detections are accurate enough to catch real threats when it matters most. What truly catches adversaries is consistent accuracy developed through continuous refinement, iteration, and tuning, not chasing after a perfect rule.

Thinking About Evasion Possibilities

Another critical mindset shift is constantly asking yourself, “If I were the attacker, how would I evade this detection?”

It’s important to realize that the effort you put into building and refining your detections is often matched by the effort adversaries put into avoiding them. Especially when dealing with advanced threats, stealth is the name of the game. Their success hinges on staying undetected long enough to achieve their objectives.

To put this into perspective, Mandiant's M-Trends 2021 report put the global median dwell time (the duration an attacker remains undetected in an environment) at 24 days. While this marks an improvement from previous years, it remains a dangerous window of vulnerability. An adversary rarely needs 24 days to inflict serious damage. They might accomplish their objectives within hours or even minutes. By the time they've lingered for 24 days, they've likely already achieved their goals and are simply maintaining persistence.

The longer it takes to detect an adversary, the more opportunity they have to map your systems, escalate privileges, and position themselves for a devastating impact. Every day and every hour they remain undetected gives them a greater advantage. Detection-in-depth, backed by continuous refinement, is your counterweight against that stealth.

Addressing Blind Spots & Improving Visibility

Improving your detection capabilities also means ruthlessly addressing your visibility gaps. Lack of visibility is one of the biggest inhibitors to effective detection. But getting better visibility isn’t just about adding more logs or tools. It’s about understanding the layers of abstraction that exist in your environment and reducing the complexity that blinds you.

Think of it like this: In the real world, if you're trying to see something clearly, you need to be at the right distance. If you’re too close, your view is too narrow and you miss the bigger picture. If you’re too far, the details become blurry. But if you’re at the right distance, with the right focus, you can see clearly. And just like we use glasses to correct poor vision, in cybersecurity we use centralized telemetry and observability platforms to correct for gaps in our environmental awareness.

For cloud environments especially, clarity comes from centralizing your data: aggregating logs, API calls, resource behaviors, and identity activities across all facets of your architecture. A well-architected observability and detection platform allows you not just to see the big picture but also to drill down into specific resources when needed. It’s not enough to see the environment broadly; you need to be able to pivot quickly into detail when a signal demands it.

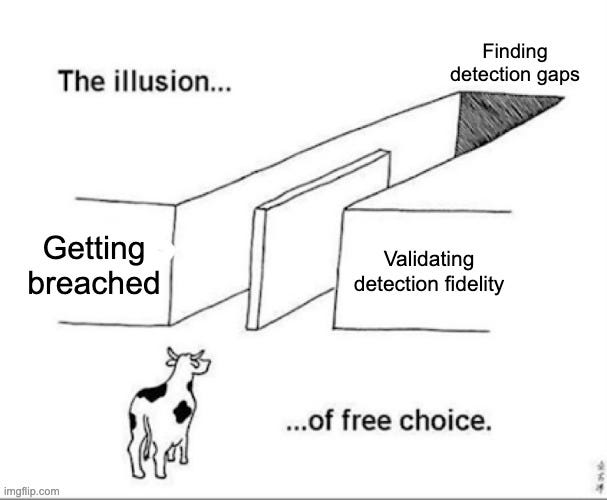

Maintaining and Validating Detection Fidelity

Even after you've built your detections and visibility pipelines, the work isn't over. It's just getting started. One of the most overlooked disciplines in detection engineering is ensuring that your detections actually continue to work over time.

The reality is that environments evolve and threats change. If you simply “set it and forget it,” your detections will eventually fail you. Instead, you need a mindset of "set it and continuously test it." Your detection is not a static product; it's a living, breathing process.

This is where threat emulation comes into the picture, and it's one of the most important yet underutilized practices in detection engineering today. Threat emulation involves taking techniques that real adversaries use and safely simulating them in your environment to verify if your detections work as intended.

Tools like Atomic Red Team and Stratus Red Team make this accessible without requiring a full red team. Rather than guessing whether a detection will trigger during an incident, you actively run controlled simulations of credential theft, privilege escalation, and persistence techniques, then observe your environment's response. When a detection fires incorrectly or misses entirely, consider it a win, because you've discovered a gap before an attacker could exploit it.

Threat emulation pushes you beyond assumptions by validating that your detection rules, telemetry pipelines, and incident response playbooks work as designed. It moves you from theory into reality and helps strengthen your detection-in-depth strategy through battle testing, not just theoretical design.
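The validation loop itself is simple to reason about: record which techniques you emulated, record which ones produced an alert, and diff the two. The sketch below assumes you have already collected both lists by hand or from your own tooling; it does not call Atomic Red Team or Stratus Red Team, and the class and function names are hypothetical. The technique IDs are MITRE ATT&CK identifiers used purely as example labels.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set


@dataclass(frozen=True)
class EmulationResult:
    technique_id: str
    detected: bool


def score_emulation(executed: Iterable[str], alerted: Set[str]) -> List[EmulationResult]:
    """Pair each emulated technique with whether any alert was attributed to it."""
    return [EmulationResult(technique, technique in alerted) for technique in executed]


def missed_techniques(results: Iterable[EmulationResult]) -> List[str]:
    """Techniques that ran but produced no alert: the gaps to fix first."""
    return [result.technique_id for result in results if not result.detected]


# Hypothetical run: three techniques emulated, two produced alerts.
executed = ["T1003", "T1548", "T1053"]  # credential dumping, elevation abuse, scheduled task
alerted = {"T1003", "T1053"}

results = score_emulation(executed, alerted)
print(missed_techniques(results))  # prints ['T1548']
```

Running the same comparison after every rule change or pipeline migration turns "set it and continuously test it" into a repeatable check rather than a one-off exercise.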

But as valuable as threat emulation is for sharpening your detections, it’s important to keep it in perspective and understand where it fits alongside other adversary simulation practices. Threat emulation isn't (and should not be) a replacement for full-scale penetration testing or dedicated red team operations.

It’s important to recognize that while emulation gives you fast, focused feedback on specific detection gaps, it doesn’t fully replicate the creativity, unpredictability, and adaptive decision-making of a real human adversary. True penetration testing and red teaming still play a critical role because they expose not just technical gaps, but also organizational and procedural weaknesses in a way that threat emulation alone won't always uncover.

In fact, the findings from red team exercises and penetration tests can be some of the best sources of feedback for strengthening detection-in-depth strategies. They force you to think beyond signatures and behavioral patterns and challenge you to detect stealthier, more dynamic attack paths that don't always map neatly to known techniques.

Either way: stop guessing, start testing.

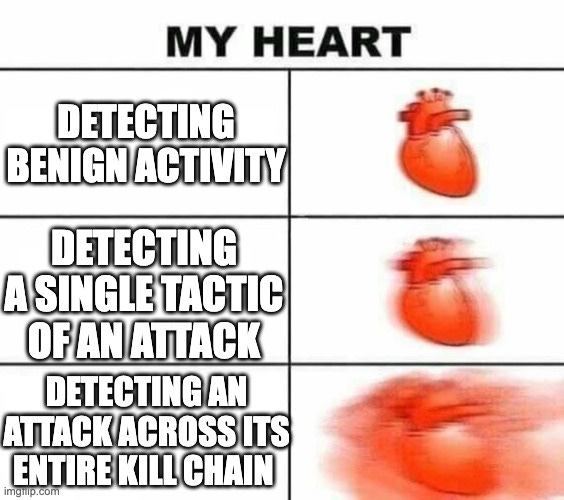

Detecting Across the Kill Chain

With detection-in-depth, the ultimate goal is to detect an adversary across multiple stages of the attack kill chain and not just at the initial compromise. It's about having layers of telemetry and detections that track the adversary’s movement from initial access, to persistence, to lateral movement, and beyond.

A strong detection strategy doesn’t just catch the first exploit attempt. It builds tripwires across every stage of an attack. For example, even if an attacker successfully spear-phishes a user and gains initial access, your environment should have detections waiting for when they attempt privilege escalation, modify registry settings, use unusual service creation techniques, or move laterally using compromised credentials.

Maybe you miss the phishing email itself. It’s okay; that happens. But you catch the new abnormal Kerberos ticket request, or the suspicious PowerShell execution that follows. Each layer gives you another shot at catching them before they reach their objective. Detection-in-depth turns every step the adversary takes into a potential point of failure for them and not for you.
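A simple way to check whether those tripwires exist at every stage is to map each detection to the stage it covers and list the stages with nothing assigned. The stage names and detection names below are illustrative assumptions, not a standard taxonomy or a real rule set.

```python
from typing import Dict, List

# Illustrative stage list; substitute whichever kill chain model you use.
KILL_CHAIN_STAGES = [
    "initial_access",
    "execution",
    "persistence",
    "privilege_escalation",
    "lateral_movement",
]

# Hypothetical detections mapped to the stage each one covers.
DETECTIONS: Dict[str, str] = {
    "suspicious_powershell_execution": "execution",
    "unusual_service_creation": "persistence",
    "registry_run_key_modified": "persistence",
    "lateral_movement_with_compromised_credentials": "lateral_movement",
}


def coverage_by_stage(detections: Dict[str, str]) -> Dict[str, List[str]]:
    """Group detection names under each stage, keeping empty stages visible."""
    coverage: Dict[str, List[str]] = {stage: [] for stage in KILL_CHAIN_STAGES}
    for name, stage in detections.items():
        coverage.setdefault(stage, []).append(name)
    return coverage


def uncovered_stages(detections: Dict[str, str]) -> List[str]:
    """Stages with no detection at all: the blind spots in the chain."""
    coverage = coverage_by_stage(detections)
    return [stage for stage in KILL_CHAIN_STAGES if not coverage[stage]]


print(uncovered_stages(DETECTIONS))
# prints ['initial_access', 'privilege_escalation']
```

In this made-up rule set, a missed phishing email would still leave three later stages covered, while privilege escalation shows up as the gap to close next.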

In cloud environments, detecting across the kill chain becomes even more critical, and even more challenging, because attackers can move fast once they establish a foothold. Detection-in-depth in the cloud means catching an adversary not just at initial access, but at every pivot point that follows.

For example, say an attacker compromises a workload by exploiting a misconfigured IAM role or leaked identity access keys. Maybe that initial compromise slips through, but if your detection-in-depth strategy is solid, you’ll have controls that catch the next moves: the unusual API calls to escalate privileges, the creation of new IAM users, the modification of security groups to open up unauthorized traffic, or the sudden spike in S3 object access requests.

Even if you miss the first move, every subsequent action should create a new opportunity for detection. In cloud-native architectures, every API call, permission change, network modification, and data access request is a breadcrumb, and a properly layered detection strategy makes sure those breadcrumbs light up before an attacker ever gets close to exfiltrating sensitive data.
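As a rough sketch of how those breadcrumbs can be layered, the example below scans CloudTrail-style records and flags any principal whose activity touches more than one tripwire category. The `eventName` and `userIdentity.arn` fields follow the CloudTrail record format, but the tripwire mapping, the category labels, the escalation rule, and the sample events (including the account ID and role names) are assumptions made for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set

# Assumed mapping from AWS API event names to a tripwire category.
TRIPWIRES: Dict[str, str] = {
    "CreateUser": "persistence",
    "CreateAccessKey": "persistence",
    "AttachUserPolicy": "privilege_escalation",
    "AuthorizeSecurityGroupIngress": "network_exposure",
}


def stages_hit_by_principal(events: Iterable[dict]) -> Dict[str, Set[str]]:
    """Collect the tripwire categories each principal has touched."""
    hits: Dict[str, Set[str]] = defaultdict(set)
    for event in events:
        stage = TRIPWIRES.get(event.get("eventName"))
        if stage is None:
            continue  # not a tripwire event
        arn = event.get("userIdentity", {}).get("arn", "unknown")
        hits[arn].add(stage)
    return dict(hits)


def principals_to_escalate(events: Iterable[dict], min_stages: int = 2) -> List[str]:
    """Principals whose activity spans at least min_stages tripwire categories."""
    hits = stages_hit_by_principal(events)
    return sorted(arn for arn, stages in hits.items() if len(stages) >= min_stages)


# Made-up sample records, trimmed to the two fields the sketch reads.
APP_ROLE = "arn:aws:iam::111122223333:role/app-role"
DEPLOY_ROLE = "arn:aws:iam::111122223333:role/deploy-role"
sample_events = [
    {"eventName": "CreateUser", "userIdentity": {"arn": APP_ROLE}},
    {"eventName": "AttachUserPolicy", "userIdentity": {"arn": APP_ROLE}},
    {"eventName": "AuthorizeSecurityGroupIngress", "userIdentity": {"arn": DEPLOY_ROLE}},
    {"eventName": "DescribeInstances", "userIdentity": {"arn": APP_ROLE}},
]

print(principals_to_escalate(sample_events))
```

Each individual event here might be routine on its own. It is the combination across categories by a single principal that raises the signal, which is the layered idea in miniature.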

Final Thoughts

Ultimately, detection-in-depth is the understanding that compromise is inevitable, but catching it early enough to stop real damage is absolutely possible if you build layered, resilient detection systems. Whether you're defending traditional infrastructure or cloud-native environments, the principle stays the same: every step an attacker takes should increase their risk of being seen.

Detection-in-depth, threat emulation, and adversary-informed testing aren't optional enhancements. They're the foundation of building detection that actually holds up under real-world pressure. Because in cybersecurity, it's not just about who has the best defenses and the shiniest new tools. It’s about who can catch the adversary when it matters most.