You Can't Find Weird If You Don't Know Normal

Five baselines with hunt queries you can run today

Quick test: Do you actually know what “normal” looks like in your environment?

How many failed logins do you expect on a Tuesday morning?

Which servers should be talking to the internet after midnight?

What’s the usual weekend data transfer from your database servers?

If you can't answer these, you're not hunting. You're hoping.

This post turns that hope into a practical, repeatable workflow. Map normal. Track drift. Catch threats.

Part 1: What Baselining Really Is (And Why Most Teams Fail)

Baselining is building a map of "normal" so you can recognize "abnormal."

If you do not know your neighborhood, every car looks suspicious. Once you know Tim down the street drives a red Toyota and leaves for work at 7:30 AM, you notice when a white van circles the block at 3 AM.

Most teams fail at baselining because they:

Try to baseline everything and end up with nothing

Build once and never update

Obsess over technical signals and ignore business rhythms

Chase perfect models instead of usable ones

Store results in spreadsheets nobody ever opens

The truth:

Baselines are living documents, not stone tablets

80 percent accuracy you use beats 100 percent perfection you never ship

Business context matters more than technical precision

Small, focused baselines are more useful than giant comprehensive ones

If you are not using it weekly, it is not a baseline

Part 2: The Five Baselines Every Hunter Needs

1. Temporal Baselines - The Rhythm of Your Network

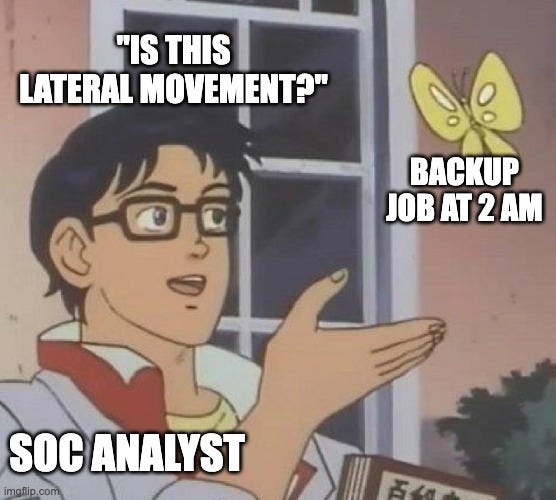

Track activity across hours, days, and business cycles so you can quickly spot logins, jobs, or traffic that happen outside expected windows.
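To make the idea concrete outside of Splunk, here is a minimal Python sketch of the same classification: decide whether an event falls outside business hours on a weekday, then count repeats per user. The 7 AM to 7 PM window, the `(timestamp, user, src_ip)` event shape, and the function names are assumptions for illustration; swap in whatever matches your own business rhythm.

```python
from collections import Counter
from datetime import datetime

BUSINESS_START = 7   # assumed start of the business window (7 AM)
BUSINESS_END = 19    # assumed end of the business window (7 PM, exclusive)


def is_off_hours_weekday(ts: datetime) -> bool:
    """Return True for weekday timestamps outside the business window."""
    is_weekend = ts.weekday() >= 5  # Monday=0 ... Saturday=5, Sunday=6
    in_business_hours = BUSINESS_START <= ts.hour < BUSINESS_END
    return not is_weekend and not in_business_hours


def off_hours_logins(events, min_count=5):
    """Count off-hours weekday logins per (user, hour, src_ip).

    `events` is an iterable of (timestamp, user, src_ip) tuples, a
    hypothetical shape chosen for this sketch.
    """
    counts = Counter(
        (user, ts.hour, src_ip)
        for ts, user, src_ip in events
        if is_off_hours_weekday(ts)
    )
    # Keep only combinations seen more than min_count times.
    return {key: n for key, n in counts.items() if n > min_count}
```

Replaying a day of exported auth logs through `off_hours_logins` is a quick way to sanity-check the window before you commit it to a scheduled search.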

Quick Win Hunt (Splunk SPL):

index=thrunt sourcetype=auth earliest=-24h@h

```Extract hour from timestamp and identify business context```

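| eval hour=tonumber(strftime(_time,"%H")) ```Convert to a number so the comparisons below are numeric```

| eval is_business_hours=if(hour>=7 AND hour<19, "yes", "no") ```Define 7AM-7PM as normal```

| eval dow=strftime(_time,"%w") ```Day of week as a string: 0=Sunday, 6=Saturday```

| eval is_weekend=if(dow="0" OR dow="6", "yes", "no")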

```Filter to only off-hours activity on weekdays```

| where is_business_hours="no" AND is_weekend="no"

```Exclude known service accounts and automation```

| where NOT match(user, "(backup_svc|monitoring_agent|scheduled_task)")

```Aggregate suspicious activity```

| stats count by user, hour, src_ip

| where count > 5 ```Flag users with >5 off-hours authentications```

| sort -count

2. Volume Baselines - Finding the Balance

Measure how much data, logins, or queries are “just right” so you can flag activity that spikes far above or drops far below normal.
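A common way to define "far above normal" is the mean plus two or three standard deviations. The sketch below works through that arithmetic in plain Python so you can test thresholds on exported numbers before putting them in a search. The function name and the list-of-hourly-totals input are assumptions for illustration. It uses the sample standard deviation, which is also what SPL's `stdev` computes.

```python
from statistics import mean, stdev


def classify_volumes(hourly_bytes):
    """Label each hourly total against mean + 2 and mean + 3 stdev.

    `hourly_bytes` is a list of hourly outbound byte totals (a
    hypothetical input shape). Needs at least two values.
    """
    avg = mean(hourly_bytes)
    sd = stdev(hourly_bytes)      # sample standard deviation
    yellow = avg + 2 * sd         # warning threshold
    red = avg + 3 * sd            # critical threshold

    results = []
    for value in hourly_bytes:
        if value > red:
            severity = "CRITICAL"
        elif value > yellow:
            severity = "WARNING"
        else:
            severity = "OK"
        results.append((value, severity))
    return results
```

One thing this makes easy to see: a large spike inflates the mean and standard deviation it is measured against, so short history windows can hide outliers. A longer lookback, or a baseline computed from a known-quiet period, reduces that effect.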

Volume Anomaly Hunt (Splunk SPL):

index=thrunt sourcetype=network earliest=-7d@d

```Create hourly buckets for analysis```

| bucket span=1h _time

| stats sum(bytes_out) as hourly_bytes by _time

```Calculate statistical thresholds based on historical patterns```

| eventstats avg(hourly_bytes) as avg_bytes, stdev(hourly_bytes) as stdev_bytes

```Set alert thresholds at 2 and 3 standard deviations```

| eval threshold_yellow=avg_bytes+(stdev_bytes*2) ```~95% of hours fall within 2 stdev if volume is roughly normal```

| eval threshold_red=avg_bytes+(stdev_bytes*3) ```~99.7% of hours fall within 3 stdev if volume is roughly normal```

```Filter to only anomalous hours```

| where hourly_bytes > threshold_yellow

```Classify severity for prioritization```

| eval severity=case(

hourly_bytes > threshold_red, "CRITICAL",

hourly_bytes > threshold_yellow, "WARNING",

1=1, "OK")

| table _time, hourly_bytes, avg_bytes, threshold_yellow, threshold_red, severity

| sort -hourly_bytes

3. Behavioral Baselines - Users and Systems in Context

Understand how different roles and systems normally act so you can catch when a developer behaves like finance, or an exec suddenly uses PowerShell.

Behavioral Anomaly Hunt (Splunk SPL):

index=thrunt user=* earliest=-24h@h

```Classify users by their role based on naming convention or their team```

| eval user_type=case(

match(user,"^dev_"), "developer", ```dev_* = developer accounts```

match(user,"^fin_"), "finance", ```fin_* = finance team```

match(user,"^exec_"), "executive", ```exec_* = executives```

1=1, "standard") ```Everything else```

```Define suspicious cross-role behaviors```

| eval suspicious=case(

user_type="executive" AND match(process,"(powershell|cmd|ssh|putty)"), "EXEC_USING_TECHNICAL_TOOLS", ```Execs shouldn't use shells```

user_type="finance" AND match(dest_host,"dev-"), "FINANCE_ACCESSING_DEV", ```Finance shouldn't touch dev systems```

user_type="developer" AND match(dest_host,"fin-"), "DEV_ACCESSING_FINANCE", ```Devs shouldn't access finance systems```

1=1, "normal")

```Filter to only suspicious activity```

| where suspicious!="normal"

```Aggregate for analysis```

| stats count by user, suspicious, process, dest_host

| sort -count

4. Network Communication Baselines - Who Talks to Whom

Map which systems normally communicate so you can flag lateral movement, direct database access, or servers reaching the internet when they never should.
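The mapping from IP address to zone, and from zone pair to verdict, is simple enough to prototype offline. This is a minimal sketch using Python's standard `ipaddress` module. The CIDR ranges and flow labels are placeholders to replace with your own segmentation policy, and the function names are hypothetical.

```python
import ipaddress

# Placeholder zone definitions; replace with your organization's ranges.
ZONES = [
    ("workstations", ipaddress.ip_network("10.1.0.0/16")),
    ("servers", ipaddress.ip_network("10.2.0.0/16")),
    ("databases", ipaddress.ip_network("10.3.0.0/16")),
    ("dmz", ipaddress.ip_network("192.168.1.0/24")),
]

# Zone pairs that violate the assumed segmentation policy.
SUSPICIOUS_FLOWS = {
    ("workstations", "workstations"): "LATERAL_MOVEMENT",
    ("databases", "external"): "DATA_EXFIL",
    ("workstations", "databases"): "DIRECT_DB_ACCESS",
    ("dmz", "servers"): "DMZ_BREACH",
}


def zone_of(ip: str, default: str) -> str:
    """Return the first zone whose network contains the address."""
    addr = ipaddress.ip_address(ip)
    for name, network in ZONES:
        if addr in network:
            return name
    return default


def classify_flow(src_ip: str, dst_ip: str) -> str:
    """Label a flow by its (source zone, destination zone) pair."""
    flow = (zone_of(src_ip, "unknown"), zone_of(dst_ip, "external"))
    return SUSPICIOUS_FLOWS.get(flow, "normal")
```

Keeping the policy in a lookup table rather than in nested conditions makes it easier to review with the network team and to extend when a new zone appears.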

Network Segmentation Violation Hunt (Splunk SPL):

index=thrunt sourcetype=network earliest=-24h@h

```Map IPs to network zones based on CIDR ranges for your org```

| eval src_zone=case(

cidrmatch("10.1.0.0/16", src_ip), "workstations", ```User subnet```

cidrmatch("10.2.0.0/16", src_ip), "servers", ```Server subnet```

cidrmatch("10.3.0.0/16", src_ip), "databases", ```Database subnet```

cidrmatch("192.168.1.0/24", src_ip), "dmz", ```DMZ subnet```

1=1, "unknown")

| eval dst_zone=case(

cidrmatch("10.1.0.0/16", dst_ip), "workstations",

cidrmatch("10.2.0.0/16", dst_ip), "servers",

cidrmatch("10.3.0.0/16", dst_ip), "databases",

cidrmatch("192.168.1.0/24", dst_ip), "dmz",

1=1, "external") ```Anything else is internet```

```Create flow description for analysis```

| eval flow=src_zone+" -> "+dst_zone

```Flag violations of network segmentation policy```

| eval is_suspicious=case(

flow="workstations -> workstations", "LATERAL_MOVEMENT", ```Workstation to workstation = potential lateral movement```

flow="databases -> external", "DATA_EXFIL", ```DB servers should never talk directly to internet```

flow="workstations -> databases", "DIRECT_DB_ACCESS", ```Users shouldn't bypass app layer```

flow="dmz -> servers", "DMZ_BREACH", ```DMZ should be isolated from internal```

1=1, "normal")

```Filter and aggregate suspicious flows```

| where is_suspicious!="normal"

| stats count by src_ip, dst_ip, dst_port, is_suspicious

| sort -count

5. Process and Application Baselines - What Runs Where

Define which processes belong on which hosts so you can quickly spot browsers on domain controllers, shells on databases, or recon tools on web servers.
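One way to express "which processes belong on which hosts" is an allowlist per host role, with anything outside the list treated as worth a look. The sketch below assumes role is inferred from a hostname prefix. The prefixes, the process names in `EXPECTED`, and the function names are all hypothetical examples, not a recommended baseline.

```python
# Hypothetical hostname prefixes mapped to host roles.
ROLE_PREFIXES = {
    "DC": "domain_controller",
    "WEB": "web_server",
    "DB": "database_server",
    "WS": "workstation",
}

# Hypothetical allowlists; build the real ones from observed data.
EXPECTED = {
    "domain_controller": {"lsass.exe", "dns.exe", "svchost.exe"},
    "web_server": {"w3wp.exe", "svchost.exe"},
    "database_server": {"sqlservr.exe", "svchost.exe"},
}


def host_role(host: str) -> str:
    """Infer the role from the hostname prefix, else 'unknown'."""
    upper = host.upper()
    for prefix, role in ROLE_PREFIXES.items():
        if upper.startswith(prefix):
            return role
    return "unknown"


def unexpected_processes(host: str, observed) -> set:
    """Return observed process names missing from the role's allowlist.

    Roles without an allowlist have an empty baseline, so every
    process on them is returned.
    """
    allowed = EXPECTED.get(host_role(host), set())
    return {name.lower() for name in observed} - allowed
```

An allowlist is noisier at first than a short list of known-bad processes, but it catches tools nobody thought to put on a blocklist.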

Process Anomaly Hunt (Splunk SPL):

index=thrunt sourcetype=endpoint process_name=* earliest=-24h@h

```Classify hosts by their role based on naming convention```

| eval host_type=case(

match(host,"^DC"), "domain_controller", ```DC* = Domain Controllers```

match(host,"^WEB"), "web_server", ```WEB* = Web servers```

match(host,"^DB"), "database_server", ```DB* = Database servers```

match(host,"^WS"), "workstation", ```WS* = Workstations```

1=1, "unknown")

```Define what processes shouldn't run where```

| eval is_suspicious=case(

host_type="domain_controller" AND match(process_name,"(chrome|firefox|notepad)"), "DC_SUSPICIOUS_PROCESS", ```DCs shouldn't browse web```

host_type="web_server" AND match(process_name,"(net\.exe|wmic|reg\.exe)"), "WEB_RECON_TOOLS", ```Web servers shouldn't run recon```

host_type="database_server" AND match(process_name,"(powershell|cmd)"), "DB_SHELL_ACCESS", ```DBs shouldn't have shell access```

1=1, "normal")

```Filter and aggregate violations```

| where is_suspicious!="normal"

| stats count by host, process_name, user, is_suspicious

| sort -count

Part 3: The Baseline Hunt Playbook

The Weekend Warrior Hunt

Find activity that only happens on weekends. This can expose persistence mechanisms or accounts that hide in off-hours.
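At its core this hunt is a set difference: everything seen on weekends minus everything seen on weekdays. A minimal Python sketch of that logic, assuming events arrive as `(timestamp, process_name)` pairs (a hypothetical shape for illustration):

```python
from datetime import datetime


def weekend_only_processes(events):
    """Return process names seen on weekends but never on weekdays.

    `events` is an iterable of (timestamp, process_name) tuples.
    """
    weekend, weekday = set(), set()
    for ts, process in events:
        # Monday=0 ... Saturday=5, Sunday=6
        if ts.weekday() >= 5:
            weekend.add(process)
        else:
            weekday.add(process)
    return weekend - weekday
```

The result depends on how much weekday history you feed in. With too short a window, legitimate monthly jobs that happened to land on a weekend will show up as well.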

SPL:

index=thrunt earliest=-30d@d

```Classify each day as weekend or weekday```

| eval dow=strftime(_time,"%w") ```Day of week as a string: 0=Sunday, 6=Saturday```

| eval day_type=if(dow="0" OR dow="6", "weekend", "weekday")

```Count unique users per process, grouped by day type```

| stats dc(user) as unique_users values(user) as user_list by day_type, process_name

```Pivot to compare weekend vs weekday activity```

| xyseries process_name day_type unique_users

| fillnull value=0 ```Replace nulls with 0 for comparison```

```Find processes that ONLY run on weekends```

| where weekend>0 AND weekday=0

| table process_name, weekend

| sort -weekend

The Gradual Change Hunt

Catch gradual increases that might otherwise fly under the radar, like data exfil creeping upward each week.
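The arithmetic behind this hunt is a comparison of each day against the average of the days before it. The sketch below assumes the rolling average covers only the preceding days, so the day being tested does not dilute its own baseline. In SPL, `streamstats` includes the current event in the window unless `current=f` is set. The function name and the 20 percent default are illustrative.

```python
def flag_increases(daily_bytes, window=7, threshold_pct=20.0):
    """Flag days whose volume exceeds the prior-window average.

    Returns a list of (day_index, percent_change) for days more than
    `threshold_pct` above the average of up to `window` preceding days.
    """
    flagged = []
    for i, today in enumerate(daily_bytes):
        prior = daily_bytes[max(0, i - window):i]
        if not prior:
            continue  # no history yet for the first day
        avg = sum(prior) / len(prior)
        if avg == 0:
            continue  # avoid dividing by zero on an empty baseline
        change = (today - avg) / avg * 100
        if change > threshold_pct:
            flagged.append((i, round(change, 1)))
    return flagged
```

A slow creep of a few percent per day can stay under a 20 percent daily threshold for a long time, so it is worth also comparing this week's average to the average from a month ago.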

SPL:

index=thrunt sourcetype=network earliest=-30d@d

```Create daily buckets for trend analysis```

| bucket span=1d _time

| stats sum(bytes_out) as daily_bytes by _time

```Calculate 7-day rolling average for comparison```

| streamstats current=f window=7 avg(daily_bytes) as week_avg ```Average of the previous 7 days, excluding the current day```

```Calculate percentage change from rolling average```

| eval week_over_week_change=((daily_bytes-week_avg)/week_avg)*100

```Flag increases over 20%```

| where week_over_week_change > 20

```Classify severity of increase```

| eval concern=case(

week_over_week_change > 50, "CRITICAL - 50%+ increase", ```Major spike```

week_over_week_change > 30, "HIGH - 30%+ increase", ```Significant increase```

week_over_week_change > 20, "MEDIUM - 20%+ increase", ```Notable increase```

1=1, "LOW")

| table _time, daily_bytes, week_avg, week_over_week_change, concern

| sort -week_over_week_change

The New Normal Hunt

Spot behaviors that recently became common but were not before. This can show attacker activity that has normalized into the background.
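Comparing distinct-process counts between two periods shows the net change, but it can miss a host where old processes disappeared and new ones took their place. An alternative is to compare the actual sets of process names. The following is a minimal sketch of that alternative, assuming you have exported a mapping of host to process names for each period. The function and parameter names are hypothetical.

```python
def new_processes_by_host(month_ago, this_week, min_new=5):
    """Return hosts with more than `min_new` never-before-seen processes.

    Both arguments map host name -> iterable of process names.
    The result maps host name -> sorted list of new process names.
    """
    result = {}
    for host, current in this_week.items():
        # Processes present now that were absent in the earlier period.
        new = set(current) - set(month_ago.get(host, ()))
        if len(new) > min_new:
            result[host] = sorted(new)
    return result
```

The sorted list of names gives the analyst something to triage immediately, where a bare count would need a follow-up search.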

SPL:

| multisearch ```Compare two time periods to find new behaviors```

[ search index=thrunt earliest=-7d@d latest=now | eval timeframe="this_week" ] ```Current week```

[ search index=thrunt earliest=-37d@d latest=-30d@d | eval timeframe="month_ago" ] ```Same week, month ago```

```Count distinct processes per host in each timeframe```

| stats dc(process_name) as process_count values(process_name) as processes by timeframe, host

```Pivot to compare timeframes side by side```

| xyseries host timeframe process_count

| fillnull value=0 ```Handle missing data```

```Calculate the net change in distinct process count (a proxy for new processes)```

| eval new_processes=this_week-month_ago

```Flag hosts with significant new activity```

| where new_processes > 5 ```A net gain of more than 5 distinct processes is worth a look```

| table host, month_ago, this_week, new_processes

| sort -new_processes

Each is baseline-driven and ready to run.

Conclusion

Baselining is not about perfection. It is about knowing your environment well enough to feel when something is wrong. The best baseline is the one you actually use.

Start small, add context, and build until your intuition does the work for you.

Next: Part 2 will walk you through implementing these baselines in 4 weeks, from zero to production-ready hunts.